History

In 1960 UNIVAC built the Livermore Atomic Research Computer (LARC), today considered among the first supercomputers, for the US Navy Research and Development Center. It still used high-speed drum memory, rather than the newly emerging disk drive technology. Also among the first supercomputers was the IBM 7030 Stretch, built by IBM for the Los Alamos National Laboratory, which in 1955 had requested a computer 100 times faster than any existing computer. The IBM 7030 used transistors, magnetic core memory and pipelined instructions, prefetched data through a memory controller, and included pioneering random-access disk drives. It was completed in 1961 and, despite not meeting the challenge of a hundredfold increase in performance, was purchased by the Los Alamos National Laboratory. Customers in England and France also bought the computer, and it became the basis for the IBM 7950 Harvest, a supercomputer built for cryptanalysis.

The third pioneering supercomputer project in the early 1960s was the Atlas at the University of Manchester, built by a team led by Tom Kilburn. He designed the Atlas to have memory space for up to a million words of 48 bits, but because magnetic storage with such a capacity was unaffordable, the actual core memory of Atlas was only 16,000 words, with a drum providing memory for a further 96,000 words. The Atlas operating system swapped data in the form of pages between the magnetic core and the drum. It also introduced time-sharing to supercomputing, so that more than one program could be executed on the supercomputer at any one time. Atlas was a joint venture between Ferranti and the University of Manchester and was designed to operate at processing speeds approaching one microsecond per instruction, about one million instructions per second.
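The page swapping described above can be illustrated with a small sketch. This is not the Atlas operating system's actual algorithm: the class name `PagedStore`, the page size, and the least-recently-used eviction policy are all assumptions chosen to keep the example short. It only shows the general idea of a small fast store (core) backed by a larger slow one (drum), with pages moved between them on demand.

```python
from collections import OrderedDict


class PagedStore:
    """Toy paged memory: a small 'core' backed by a larger 'drum'.

    Hypothetical illustration only; the eviction policy (LRU) is an
    assumption, not the policy Atlas used.
    """

    def __init__(self, core_pages, page_size):
        self.core_pages = core_pages  # number of pages that fit in core
        self.page_size = page_size    # words per page (assumed value)
        self.core = OrderedDict()     # page number -> words, oldest first
        self.drum = {}                # page number -> words
        self.faults = 0               # count of pages brought into core

    def _load(self, page):
        """Return the page's words, bringing the page into core if needed."""
        if page in self.core:
            self.core.move_to_end(page)  # mark as most recently used
            return self.core[page]
        self.faults += 1
        if len(self.core) >= self.core_pages:
            # Core is full: move the least recently used page out to the drum.
            victim, words = self.core.popitem(last=False)
            self.drum[victim] = words
        # Fetch the page from the drum, or create it zero-filled on first use.
        words = self.drum.pop(page, None)
        if words is None:
            words = [0] * self.page_size
        self.core[page] = words
        return words

    def read(self, address):
        page, offset = divmod(address, self.page_size)
        return self._load(page)[offset]

    def write(self, address, value):
        page, offset = divmod(address, self.page_size)
        self._load(page)[offset] = value


store = PagedStore(core_pages=2, page_size=4)
store.write(0, 11)    # touches page 0
store.write(4, 22)    # touches page 1
store.write(8, 33)    # touches page 2, so page 0 is moved to the drum
print(store.read(0))  # page 0 is swapped back in from the drum
```

The program sees one flat address space; which pages currently sit in core is decided behind the scenes, which is the property that lets the addressable memory be larger than the physical core.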

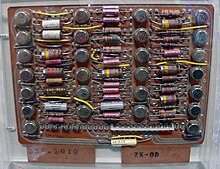

The CDC 6600, designed by Seymour Cray, was finished in 1964 and marked the transition from germanium to silicon transistors. Silicon transistors could run faster, and the overheating problem was solved by introducing refrigeration to the supercomputer design. Thus the CDC 6600 became the fastest computer in the world. Because the 6600 outperformed all other contemporary computers by about 10 times, it was dubbed a supercomputer, and it defined the supercomputing market: one hundred computers were sold at $8 million each.

Cray left CDC in 1972 to form his own company, Cray Research. Four years later, in 1976, Cray delivered the 80 MHz Cray-1, which became one of the most successful supercomputers in history. The Cray-2 was released in 1985. It had eight central processing units (CPUs) and was liquid-cooled, with the electronics coolant Fluorinert pumped through the supercomputer architecture. It performed at 1.9 gigaFLOPS and was the world's second fastest after the M-13 supercomputer in Moscow.

Massively parallel designs

The only computer to seriously challenge the Cray-1's performance in the 1970s was the ILLIAC IV. This machine was the first realized example of a true massively parallel computer, in which many processors worked together to solve different parts of a single larger problem. In contrast with the vector systems, which were designed to run a single stream of data as quickly as possible, a massively parallel computer feeds separate parts of the data to entirely different processors and then recombines the results. The ILLIAC's design was finalized in 1966 with 256 processors, offering speeds of up to 1 GFLOPS, compared to the Cray-1's peak of 250 MFLOPS in the 1970s. However, development problems led to only 64 processors being built, and the system could never operate faster than about 200 MFLOPS while being much larger and more complex than the Cray. Another problem was that writing software for the system was difficult, and getting peak performance from it took serious effort.
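The split-and-recombine pattern described above can be sketched in a few lines. This is a minimal illustration, not how the ILLIAC IV was programmed: the function names are hypothetical, and a thread pool stands in for the separate processors. In CPython, threads will not actually speed up this arithmetic, so the sketch shows only the structure of the computation.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut data into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks get one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done independently by one (simulated) processor."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Feed each chunk to its own worker, then recombine the results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


print(parallel_sum_of_squares(list(range(10)), workers=4))  # prints 285
```

Even in this toy form, the programmer has to decide how to divide the data and how to combine the partial results, which hints at why writing software for such machines was harder than for a single fast processor.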

But the partial success of the ILLIAC IV was widely seen as pointing the way to the future of supercomputing. Cray argued against this, famously quipping that "If you were plowing a field, which would you rather use? Two strong oxen or 1024 chickens?" But by the early 1980s, several teams were working on parallel designs with thousands of processors, notably the Connection Machine (CM) that developed from research at MIT. The CM-1 used as many as 65,536 simplified custom microprocessors connected together in a network to share data. Several updated versions followed; the CM-5 supercomputer is a massively parallel processing computer capable of many billions of arithmetic operations per second.

In 1982, Osaka University's LINKS-1 Computer Graphics System used a massively parallel processing architecture, with 514 microprocessors, including 257 Zilog Z8001 control processors and 257 iAPX 86/20 floating-point processors. It was mainly used for rendering realistic 3D computer graphics. Fujitsu's VPP500 from 1992 is unusual since, to achieve higher speeds, its processors used GaAs, a material normally reserved for microwave applications due to its toxicity. Fujitsu's Numerical Wind Tunnel supercomputer used 166 vector processors to gain the top spot in 1994 with a peak speed of 1.7 gigaFLOPS (GFLOPS) per processor. The Hitachi SR2201 obtained a peak performance of 600 GFLOPS in 1996 by using 2048 processors connected via a fast three-dimensional crossbar network. The Intel Paragon could have 1000 to 4000 Intel i860 processors in various configurations and was ranked the fastest in the world in 1993. The Paragon was a MIMD machine which connected processors via a high-speed two-dimensional mesh, allowing processes to execute on separate nodes and communicate via the Message Passing Interface.

Software development remained a problem, but the CM series sparked off considerable research into this issue. Similar designs using custom hardware were made by many companies, including the Evans & Sutherland ES-1, MasPar, nCUBE, Intel iPSC and the Goodyear MPP. But by the mid-1990s, general-purpose CPU performance had improved so much that a supercomputer could be built using them as the individual processing units, instead of using custom chips. By the turn of the 21st century, designs featuring tens of thousands of commodity CPUs were the norm, with later machines adding graphics units to the mix.

Systems with a massive number of processors generally take one of two paths. In the grid computing approach, the processing power of many computers, organised as distributed, diverse administrative domains, is opportunistically used whenever a computer is available. In another approach, a large number of processors are used in proximity to each other, e.g. in a computer cluster. In such a centralized massively parallel system, the speed and flexibility of the interconnect become very important, and modern supercomputers have used various approaches ranging from enhanced InfiniBand systems to three-dimensional torus interconnects. The use of multi-core processors combined with centralization is an emerging direction, e.g. as in the Cyclops64 system.

As the price, performance and energy efficiency of general-purpose graphics processing units (GPGPUs) have improved, a number of petaFLOPS supercomputers such as Tianhe-I and Nebulae have started to rely on them. However, other systems such as the K computer continue to use conventional processors such as SPARC-based designs, and the overall applicability of GPGPUs in general-purpose high-performance computing applications has been the subject of debate: while a GPGPU may be tuned to score well on specific benchmarks, its overall applicability to everyday algorithms may be limited unless significant effort is spent to tune the application to it. Nevertheless, GPUs are gaining ground, and in 2012 the Jaguar supercomputer was transformed into Titan by retrofitting CPUs with GPUs.

High-performance computers have an expected life cycle of about three years before requiring an upgrade. The Gyoukou supercomputer is unique in that it uses both a massively parallel design and liquid immersion cooling.
