Distributed supercomputing

Opportunistic approaches

Opportunistic supercomputing is a form of networked grid computing whereby a "super virtual computer" of many loosely coupled volunteer computing machines performs very large computing tasks. Grid computing has been applied to a number of large-scale embarrassingly parallel problems that require supercomputer-scale performance. However, basic grid and cloud computing approaches that rely on volunteer computing cannot handle traditional supercomputing tasks such as fluid dynamics simulations, which need tightly coupled processes that exchange data frequently over low-latency interconnects.
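The embarrassingly parallel pattern that suits volunteer machines can be sketched in a few lines of Python. This is a minimal illustration, not how any particular project works: the prime-counting task, the function names, and the `replication` parameter are hypothetical, and threads stand in for remote volunteers. Each work unit is independent, so units never communicate with one another; the only coordination is handing out units and combining their results. Sending each unit to more than one volunteer and comparing the answers is one common way to guard against faulty or dishonest hosts.

```python
from concurrent.futures import ThreadPoolExecutor


def make_work_units(start, stop, unit_size):
    """Split [start, stop) into independent, non-overlapping work units."""
    return [(lo, min(lo + unit_size, stop)) for lo in range(start, stop, unit_size)]


def is_prime(n):
    """Trial division; deliberately simple, since each unit is small."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def process_unit(unit):
    """Work done by one (hypothetical) volunteer: count primes in its range."""
    lo, hi = unit
    return sum(1 for n in range(lo, hi) if is_prime(n))


def run(start, stop, unit_size, volunteers=4, replication=2):
    """Farm out work units, cross-check replicas, and combine the results."""
    units = make_work_units(start, stop, unit_size)
    # Each unit is issued `replication` times, as if to different volunteers.
    jobs = [unit for unit in units for _ in range(replication)]
    with ThreadPoolExecutor(max_workers=volunteers) as pool:
        results = list(pool.map(process_unit, jobs))  # map preserves job order
    total = 0
    for i, unit in enumerate(units):
        replicas = results[i * replication:(i + 1) * replication]
        if len(set(replicas)) != 1:
            raise ValueError(f"replicas disagree for unit {unit}: {replicas}")
        total += replicas[0]
    return total


print(run(0, 100, 10))
```

Here `run(0, 100, 10)` returns 25, the number of primes below 100, after every unit's two replicas have agreed.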

The fastest grid computing system is the distributed computing project Folding@home (F@h). As of April 2020, F@h reported 2.5 exaFLOPS of x86 processing power. Of this, over 100 PFLOPS were contributed by clients running on various GPUs, and the rest by various CPU systems.

The Berkeley Open Infrastructure for Network Computing (BOINC) platform hosts a number of distributed computing projects. As of February 2017, BOINC recorded a processing power of over 166 petaFLOPS from over 762 thousand active computers (hosts) on the network.

As of October 2016, the Great Internet Mersenne Prime Search (GIMPS) achieved about 0.313 PFLOPS through over 1.3 million computers. Since 1997, the Internet PrimeNet Server has supported GIMPS's grid computing approach, one of the earliest and most successful grid computing projects.
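A rough sense of scale comes from dividing each project's reported throughput by its host count. The sketch below does only that arithmetic, using the BOINC and GIMPS figures quoted in the text; the function name is illustrative. The averages are crude: both totals are lower bounds ("over"), the figures come from different dates, and the two projects may count hosts differently.

```python
PETA = 1e15


def average_flops_per_host(total_flops: float, hosts: int) -> float:
    """Mean throughput per host; hides the spread between fast and slow machines."""
    return total_flops / hosts


# Figures as reported in the text.
boinc_avg = average_flops_per_host(166 * PETA, 762_000)      # BOINC, February 2017
gimps_avg = average_flops_per_host(0.313 * PETA, 1_300_000)  # GIMPS, October 2016

print(f"BOINC: {boinc_avg / 1e9:.0f} GFLOPS per host")  # BOINC: 218 GFLOPS per host
print(f"GIMPS: {gimps_avg / 1e6:.0f} MFLOPS per host")  # GIMPS: 241 MFLOPS per host
```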

Quasi-opportunistic approaches

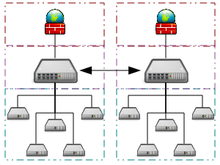

Quasi-opportunistic supercomputing is a form of distributed computing whereby the "super virtual computer" of many networked, geographically dispersed computers performs computing tasks that demand huge processing power. Quasi-opportunistic supercomputing aims to provide a higher quality of service than opportunistic grid computing by exercising more control over the assignment of tasks to distributed resources and by using information about the availability and reliability of individual systems within the supercomputing network. Quasi-opportunistic distributed execution of demanding parallel software in grids should be achieved through grid-wise allocation agreements, co-allocation subsystems, communication topology-aware allocation mechanisms, fault-tolerant message passing libraries, and data pre-conditioning.
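The idea of using reliability information during allocation can be illustrated with a small co-allocation sketch. Everything here is hypothetical: the `Site` fields, the reliability threshold, and the greedy ordering are assumptions made for illustration, not the mechanism of any real grid middleware. A parallel job's processes must all be placed together (all-or-nothing co-allocation), and because the job fails if any of its sites fails, its chance of finishing is the product of the chosen sites' reliabilities, assuming failures are independent.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    free_cores: int
    reliability: float  # hypothetical: estimated probability the site stays up for the job


def co_allocate(sites, cores_needed, min_reliability=0.9):
    """All-or-nothing co-allocation: return {site_name: cores}, or None if it cannot fit."""
    candidates = [s for s in sites if s.reliability >= min_reliability and s.free_cores > 0]
    # Most reliable sites first; among equally reliable sites, larger ones first,
    # so the job tends to span fewer sites and fewer wide-area links.
    candidates.sort(key=lambda s: (-s.reliability, -s.free_cores))
    plan, remaining = {}, cores_needed
    for site in candidates:
        if remaining == 0:
            break
        take = min(site.free_cores, remaining)
        plan[site.name] = take
        remaining -= take
    # Partial allocations are useless to a tightly coupled job, so reject them.
    return plan if remaining == 0 else None


def success_probability(sites, plan):
    """Probability that every site in the plan survives, assuming independent failures."""
    by_name = {s.name: s for s in sites}
    return math.prod(by_name[name].reliability for name in plan)


sites = [Site("A", 64, 0.99), Site("B", 128, 0.95), Site("C", 256, 0.80)]
plan = co_allocate(sites, 150)
print(plan, success_probability(sites, plan))
```

With the default threshold, a 150-core job lands on sites A and B; a 300-core job cannot be placed at all unless the threshold is lowered enough to admit the less reliable site C, which also lowers the job's chance of completing.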
